
In a move that has sparked privacy concerns, LinkedIn, the professional networking platform owned by Microsoft, has been using user data to train generative AI models without obtaining users’ explicit consent. The revelation came with an update to the platform’s privacy policy, which introduced a new setting that lets users opt out of having their data used for AI model training. However, the update has left many questioning how much control they truly have over their data.

The rise of artificial intelligence (AI) in various industries has prompted many companies to seek vast amounts of user-generated data to optimize their machine learning and AI models. While AI advancements promise innovation and efficiency, the collection of personal data without explicit permission raises significant ethical and legal questions. LinkedIn’s recent actions mirror a broader trend in tech, where data privacy and AI innovation are increasingly at odds.

LinkedIn’s Updated AI Data Policies and Opt-Out Options

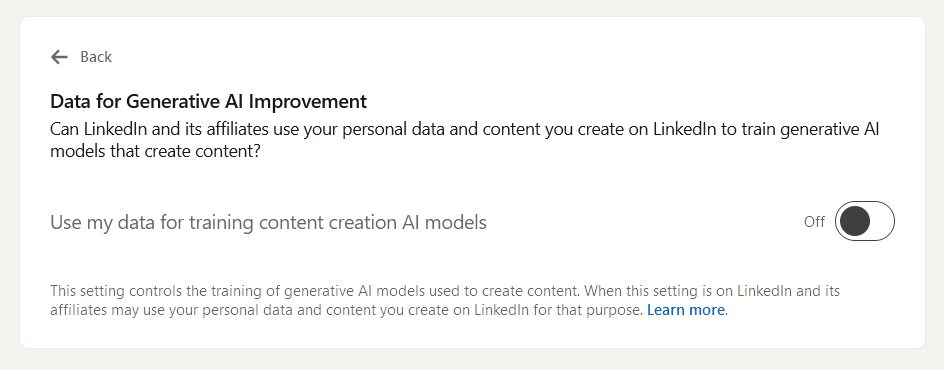

LinkedIn’s updated privacy policy now explicitly states that personal data from the platform may be used for product development, service improvement, and, notably, the training of AI models. According to LinkedIn, this includes generative AI technologies, such as writing assistance tools. The update has provided users with the option to opt out of having their data used for these AI training purposes by navigating to the Data Privacy tab in their account settings.

However, users have expressed concern that the platform was already using their data for AI training without their knowledge. LinkedIn clarified that opting out prevents future use of their data for model training but does not reverse any past usage. This distinction has frustrated users, especially those unaware that their data had been used in this way.

LinkedIn has also emphasized that it employs privacy-enhancing techniques, such as data anonymization and information removal, to minimize the inclusion of personal information in AI training datasets. Moreover, the platform has stated that it does not use data from individuals residing in the European Union, the European Economic Area, or Switzerland for this training, likely due to the region’s stringent privacy regulations, such as the General Data Protection Regulation (GDPR).

AI and Data Ethics: A Growing Debate in Tech

The controversy surrounding LinkedIn’s use of personal data for AI training highlights a broader issue within the tech industry: the balance between innovation and user privacy. As AI models become more sophisticated, they require large datasets to learn and improve. For platforms like LinkedIn, which house vast amounts of professional data, the temptation to leverage this information for AI development is high.

However, this practice is not without its risks. The ethical use of AI, particularly in terms of data privacy, has been a hot topic in recent years. Companies like Meta have also faced scrutiny for similar practices, with reports suggesting the social media giant has trained AI models on non-private user data dating back to as early as 2007. As more organizations incorporate AI into their services, the question of how they handle user data becomes ever more pressing.

For LinkedIn, the key concern is whether its users are sufficiently informed about how their data is being used. Transparency is crucial in maintaining user trust, particularly in the professional space where data security and privacy are paramount. While the platform’s introduction of an opt-out feature is a step in the right direction, it still leaves questions about the extent to which users can control their data.

What This Means for LinkedIn Users and the Future of AI

For LinkedIn users, the ability to opt out of generative AI data usage offers some relief, but it also serves as a reminder that user data is a valuable commodity in today’s digital economy. As AI continues to evolve, platforms like LinkedIn will likely face increasing scrutiny over how they collect and use data, particularly in light of growing awareness of digital rights and privacy.

In the broader context, LinkedIn’s move could signal a shift in how major tech companies approach AI development. While AI has the potential to revolutionize industries, the means by which it is trained must be carefully managed to ensure ethical and legal standards are met. For now, users concerned about their privacy can take proactive steps to opt out of AI data usage, but this may only be a temporary solution.

In conclusion, the intersection of AI innovation and data privacy remains a complex landscape. LinkedIn’s recent changes underscore the importance of transparency and user control in the digital age. As AI technologies continue to advance, it is crucial that companies strike the right balance between leveraging data for innovation and respecting the privacy and rights of their users.