
In recent years, the concept of autonomous weapons, often referred to as “slaughterbots,” has transitioned from the realm of science fiction to a pressing reality. These AI-powered weapons have sparked intense debates among technologists, ethicists, and policymakers. This article delves into the rise of autonomous weapons, the technology behind them, real-world examples, and the ethical and legal implications. We will also explore the future of warfare and whether humanity can control the spread of these potentially devastating tools.

The Rise of Autonomous Weapons: A New Era of Warfare

The advent of autonomous weapons marks a significant shift in modern warfare. Unlike traditional weapons, which require a human to select and engage each target, autonomous weapons can identify, select, and engage targets without direct human control. This shift is driven by advances in artificial intelligence (AI) and machine learning, which have enabled machines to perform complex tasks with increasing precision.

According to the Stockholm International Peace Research Institute (SIPRI), global military spending reached nearly $2 trillion in 2020. SIPRI's figures do not isolate spending on AI, but major militaries have made AI and autonomous systems a priority within these budgets, which underscores the growing importance of these technologies in national defense strategies. However, the rise of autonomous weapons also raises critical questions about accountability, ethics, and the potential for misuse.

What Are Slaughterbots? Understanding the Concept

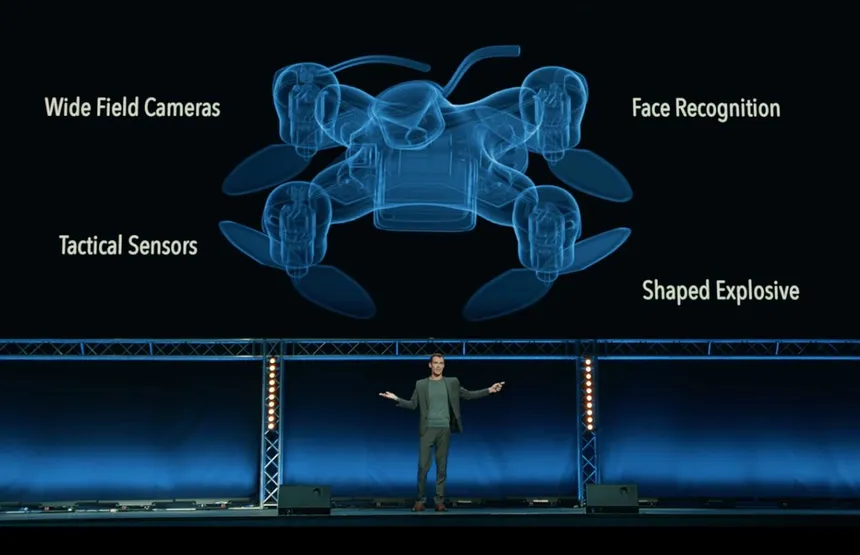

Slaughterbots, a term popularized by a viral video produced by the Future of Life Institute, refer to small, AI-powered drones designed to kill with precision. These devices can operate independently, using facial recognition and other sensors to identify and eliminate targets. The video, which depicts a dystopian future where slaughterbots are used for targeted assassinations, has sparked widespread concern and debate.

The concept of slaughterbots is not entirely fictional. In 2017, the United Nations held discussions on lethal autonomous weapons systems (LAWS), highlighting the urgent need for international regulations. The potential for these weapons to be used in warfare, terrorism, and even domestic policing has led to calls for a preemptive ban. Critics argue that the deployment of slaughterbots could lead to a new arms race, with devastating consequences for global security.

The Technology Behind AI-Powered Weapons

The technology that powers slaughterbots is a combination of AI, machine learning, and advanced robotics. AI algorithms enable these weapons to process vast amounts of sensor data, recognize patterns, and make decisions in real time. Machine learning allows them to improve their performance over time, adapting to new environments and threats.

One of the key components of AI-powered weapons is computer vision, which enables machines to interpret visual information. This technology underpins facial recognition systems, which can identify individuals with high accuracy under favorable conditions, although error rates rise with poor lighting, low image resolution, or unrepresentative training data. AI-powered weapons also often incorporate sensors and communication systems that allow them to coordinate with other devices and with human operators. Together, these technologies create a powerful and potentially lethal tool.

Real-World Examples: Are Slaughterbots Already Here?

While fully autonomous slaughterbots may still be in development, several real-world examples suggest that the technology is advancing rapidly. The United States military's MQ-9 Reaper drone, in service since 2007, is remotely piloted but relies on automation for some tasks, such as flight control and surveillance. Although human operators still control the drone’s lethal functions, the trend toward greater autonomy in these systems is evident.

Another example is the Israeli Harpy drone, designed to detect and destroy radar systems autonomously. This loitering munition can operate without human intervention, making decisions based on pre-programmed criteria. These examples indicate that the line between semi-autonomous and fully autonomous weapons is becoming increasingly blurred.

Ethical and Legal Implications of Autonomous Weapons

The deployment of autonomous weapons raises profound ethical and legal questions. One of the primary concerns is accountability. If a slaughterbot makes a mistake or is used maliciously, who is responsible? The lack of clear accountability mechanisms could lead to a situation where no one is held liable for wrongful deaths or other harms.

From a legal perspective, the use of autonomous weapons challenges existing frameworks of international humanitarian law. The principles of distinction and proportionality, which require combatants to distinguish between military targets and civilians and to use force proportionately, may be difficult to uphold with autonomous systems. Human Rights Watch and other organizations have called for a ban on fully autonomous weapons, arguing that they cannot meet these legal standards.

The Future of Warfare: Can We Control the Spread of Slaughterbots?

As the technology behind autonomous weapons continues to evolve, the question of control becomes increasingly urgent. Can international regulations effectively prevent the proliferation of slaughterbots? Some experts argue that a preemptive ban, similar to the treaties on chemical and biological weapons, is necessary to prevent a new arms race.

However, others believe that regulation alone may not be sufficient. The dual-use nature of AI technology, which serves both civilian and military purposes, complicates efforts to control its spread. In addition, non-state actors such as terrorist organizations could develop or acquire autonomous weapons, further exacerbating the threat.

Conclusion

The rise of autonomous weapons represents a new era of warfare, with profound implications for global security, ethics, and law. While the technology behind slaughterbots is advancing rapidly, the ethical and legal frameworks needed to govern their use are still lagging. Real-world examples suggest that semi-autonomous systems are already in use, and fully autonomous weapons may not be far behind.

The future of warfare will likely be shaped by the decisions made today regarding the development and regulation of AI-powered weapons. A balanced approach, incorporating diverse perspectives and robust international cooperation, will be essential to ensure that the benefits of these technologies are realized while minimizing their risks. As we stand on the brink of this new era, the question remains: can we control the spread of slaughterbots, or will they control us?